A multiyear compute super-cycle is underpinning AI’s rapid advance. Surging model scale and inference workloads are colliding with scarce advanced fabrication and packaging capacity, creating durable pricing power and high barriers to entry across the infrastructure stack—an ecosystem potentially poised to deliver long-term value for investors.

Key Points

- Explosive growth in AI model training and inference workloads is driving a multiyear surge in demand for data-center and accelerator capacity.

- Constrained supply of leading-edge fabrication and advanced packaging has enabled a small group of suppliers to capture the majority of market share.

- A tightly integrated hardware-software ecosystem creates high barriers to entry and entrenched competitive moats, which we believe can generate long-term value for our clients.

Artificial intelligence (AI) continues to climb the proverbial wall of worry, confronting recurring bouts of skepticism over its practical use cases and return on investment. This pattern is hardly new. In the early 1980s, observers dismissed the personal computer as a niche curiosity, quipping that the PC would remain a toy unless it could find a compelling business application.1

Decades later, others continued to downplay the smartphone’s potential, questioning whether consumers would pay premium prices for miniature handheld devices. And long before that, IBM executives viewed the mainframe as a mature technology with limited upside. In each case, history proved the skeptics wrong. Platforms once derided as marginal have gone on to reshape industries, create new ecosystems, and reward patient capital with outsized returns.2

At Sands Capital, we believe AI is poised to repeat this arc. Beneath the surface of periodic controversy and exaggerated headlines lies a technology whose core capabilities are scaling at an astonishing pace. As these capabilities accelerate, we anticipate an explosion of practical applications that will rival, or even surpass, the revolutions brought about by PCs and smartphones.

In the sections that follow, we explore the three fundamental “scaling frontiers” driving AI’s rapid ascent, survey the empirical benchmarks that demonstrate its progress, assess the ramifications for use-case proliferation, and finally, examine the infrastructure investments that we believe are set to benefit from this transformative wave.

Scaling Intelligence

AI Scaling Laws

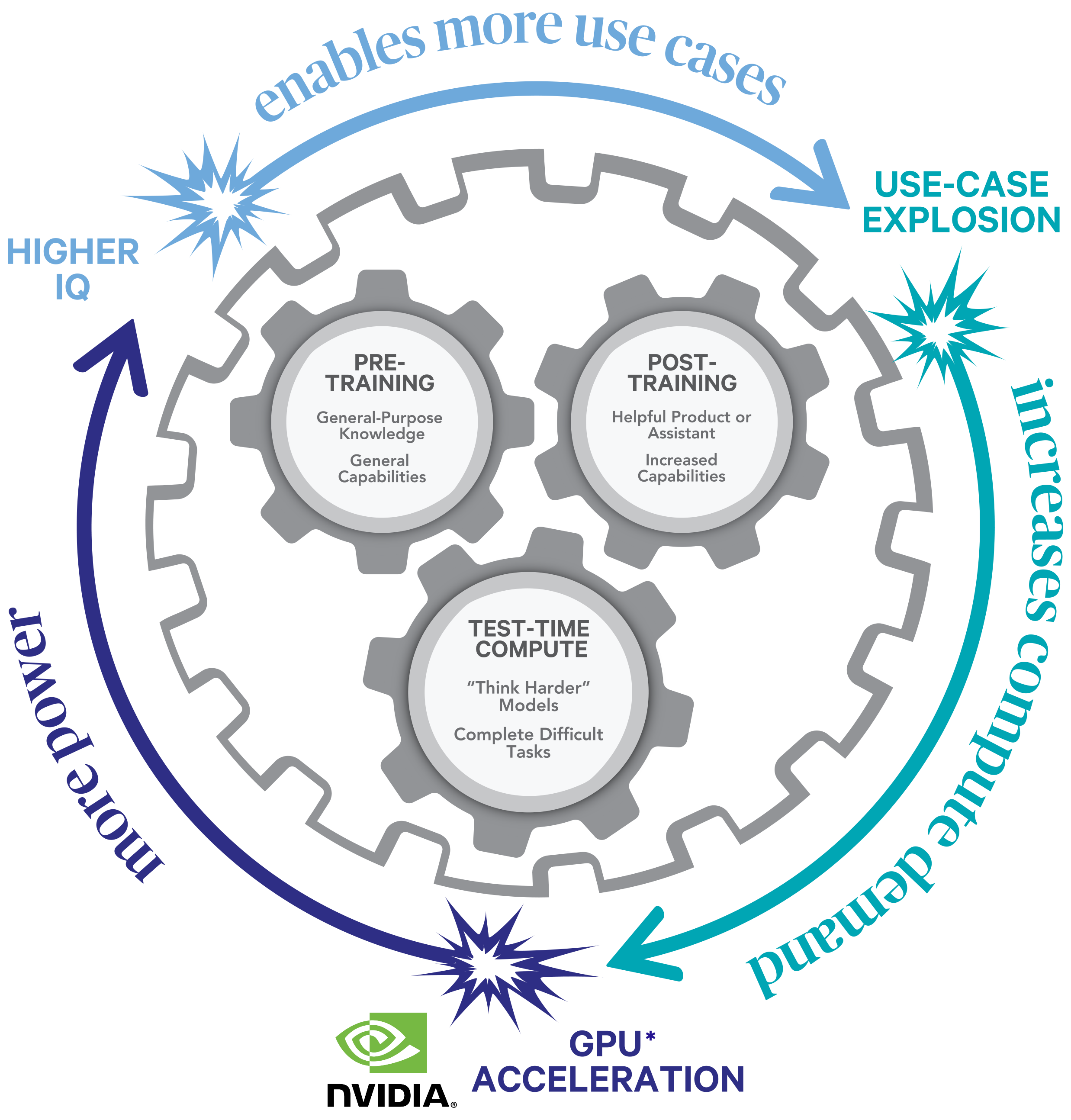

The dramatic improvements in AI over the past several years can be traced to advances along three interlocking dimensions of scale. The first dimension, pre-training, imbues the model with general-purpose knowledge and capabilities. Next, post-training molds the pre-trained model into a helpful, usable product while continuing to increase its capabilities. The final dimension, test-time compute, allows the model to ‘think harder’ and complete more difficult tasks.

The Bedrock of Modern Language and Vision

Pre-training constitutes the bedrock of modern language and vision models. In this phase, a model digests an ever-expanding body of content, including text, images, audio, and even video, while learning to predict the next token (whether a word, pixel, or code snippet) based on statistical patterns in the data. As models grew in size (measured by the number of parameters they contain), they were able to learn deeper and more nuanced patterns from the data, making them significantly more accurate and useful for downstream tasks. Early breakthrough models, such as Google’s BERT and OpenAI’s GPT-1, had roughly one hundred million to a few hundred million parameters. Today, state-of-the-art models like GPT-4.5 are widely estimated to have hundreds of billions to trillions of parameters (OpenAI has not disclosed the figure) and incorporate novel techniques to learn efficiently from their training data sets. These larger, more sophisticated models exhibit qualitatively different behavior: they can sustain coherent narratives across increasingly long horizons, translate across dozens of languages, and generate increasingly large amounts of code by inferring intent from context. Crucially, as model size and dataset scale have climbed, so too has performance, often following a power-law relationship whereby each 10x increase in compute yields a consistent percentage reduction in loss (the model’s prediction error).
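The power-law relationship described above can be made concrete with a short numerical sketch. It assumes a loss curve of the form L(C) = A · C^(-α), where C is training compute; the constants `A` and `ALPHA` below are hypothetical placeholders chosen for illustration, not fitted values for any real model. The point is that under a power law, every 10x step in compute shrinks loss by the same factor, 10^(-α), and the curve is a straight line on log-log axes.

```python
import math

# Hypothetical power-law constants for L(C) = A * C**(-ALPHA).
# These are illustrative placeholders, not measurements of any real model.
A = 10.0
ALPHA = 0.05


def loss(compute: float) -> float:
    """Predicted loss for a training-compute budget (arbitrary units)."""
    return A * compute ** (-ALPHA)


def factor_per_10x(alpha: float) -> float:
    """Multiplicative change in loss for every 10x increase in compute."""
    return 10 ** (-alpha)


def log_log_slope(c1: float, c2: float) -> float:
    """Slope of the loss curve on log-log axes; a power law gives a constant -ALPHA."""
    return (math.log(loss(c2)) - math.log(loss(c1))) / (math.log(c2) - math.log(c1))


if __name__ == "__main__":
    previous = None
    for exponent in range(20, 26):
        current = loss(10.0 ** exponent)
        note = "" if previous is None else f"  (x{current / previous:.4f} vs. 10x less compute)"
        print(f"compute=1e{exponent}: loss={current:.4f}{note}")
        previous = current
    # Every step shows the same ratio, 10**(-ALPHA) ~= 0.8913,
    # i.e. a constant ~10.9 percent reduction in loss per 10x of compute.
    print(f"constant factor per 10x: {factor_per_10x(ALPHA):.4f}")
```

With these placeholder constants, each additional order of magnitude of compute trims loss by about 10.9 percent; a real fit would have its own exponent, but the constant-ratio shape is what "power law" means here.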

Post-training

Pre-training lays the groundwork for raw knowledge and model capabilities but produces neither alignment with human objectives nor a useful end-user experience. In short, the model is primed and full of potential but does not know how to behave. The first generation of popular models, such as ChatGPT, Claude, and Gemini, relied on two techniques, supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF), to follow instructions more faithfully, avoid harmful or biased content, and maintain conversational coherence. While these two methods have different technical implementations, they share the same crucial ingredient: carefully curated human input, whether example responses painstakingly written by people (SFT) or human judgments about which of several responses is better (RLHF).

One of the major breakout ideas of 2025 is a new post-training technique called reinforcement learning with verifiable rewards (RLVR), which does not require a human in the loop to judge each output. In this setup, models are given access to systems that can automatically grade their outputs, so they can learn by trial and error. Examples include math questions and proofs with checkable answers, software engineering tasks validated by tests, and data manipulation problems. Research efforts are very likely underway to extend this capability to domains where the idea of ‘correctness’ is harder to define. Early signs of progress include OpenAI’s deep research product, which learned to scour the internet and create compelling research reports. The implication of these breakthroughs is clear: unburdened by the bottleneck of human feedback, we expect leading labs to scale this axis of training, just as they have done with pre-training, to continue improving model capabilities.
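To illustrate what “automatically grade their outputs” means in practice, here is a minimal sketch of two verifiable reward functions of the kind RLVR relies on: one checks a final math answer against a known reference, the other scores a generated function by the fraction of unit tests it passes. The “Answer:” output convention and all function names are hypothetical assumptions for illustration; real training pipelines differ in detail.

```python
import re
from fractions import Fraction
from typing import Callable, List, Optional, Tuple


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the token after the last 'Answer:' marker (a hypothetical output convention)."""
    matches = re.findall(r"Answer:\s*(\S+)", completion)
    return matches[-1].rstrip(".,") if matches else None


def math_reward(completion: str, reference: str) -> float:
    """1.0 if the final answer equals the reference as an exact number, else 0.0."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0
    try:
        return 1.0 if Fraction(answer) == Fraction(reference) else 0.0
    except (ValueError, ZeroDivisionError):
        return 0.0  # Unparseable answers earn no reward.


def code_reward(candidate: Callable[[int], int], tests: List[Tuple[int, int]]) -> float:
    """Fraction of (input, expected_output) unit tests the candidate passes."""
    passed = 0
    for arg, expected in tests:
        try:
            if candidate(arg) == expected:
                passed += 1
        except Exception:
            pass  # A crash counts as a failed test.
    return passed / len(tests)


if __name__ == "__main__":
    print(math_reward("12 / 24 simplifies, so Answer: 1/2.", "0.5"))  # 1.0
    print(code_reward(lambda n: n * n, [(2, 4), (3, 9), (4, 15)]))
```

Because both graders are ordinary code, a training loop can call them millions of times with no human labeler involved, which is the property that lets this axis of training scale.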

Test-time compute (Inference)

The final scaling frontier lies in test-time compute, which is sometimes referred to as “thinking time.” Just as a human’s reasoning often improves with additional deliberation, AI systems can refine their outputs by expending more compute per query. There are many ways to implement test-time scaling. For example, some systems generate many candidate answers in parallel and then choose the best one. Another approach is to have the model generate longer and longer ‘chains of thought’ in which it deliberates, backtracks, and continually refines its answer until it is satisfied. These processes, while computationally intensive, yield higher-quality answers, deeper insights, and a marked reduction in hallucinations and logical errors. As inference hardware becomes more powerful and cost-efficient thanks to specialized AI accelerators, graphics processing units (GPUs) with tensor cores, and novel memory architectures, prospects for real-time, high-quality reasoning in mission-critical applications are rapidly moving from science fiction to commercial reality.
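The first approach, generating many candidates and keeping the best, is often called best-of-N sampling. The sketch below simulates it with a toy “model” that answers a question correctly 30 percent of the time and a verifier that recognizes the right answer; both are hypothetical stand-ins. Under the simplifying assumptions of a perfect verifier and independent samples, the chance of returning a correct answer rises as 1 - (1 - p)^N, which is why spending more compute per query can buy higher accuracy.

```python
import random
from typing import Callable


def best_of_n(generate: Callable[[], str], score: Callable[[str], float], n: int) -> str:
    """Sample n candidate answers and return the one the scorer rates highest."""
    candidates = [generate() for _ in range(n)]
    return max(candidates, key=score)


def success_probability(p_single: float, n: int) -> float:
    """Chance that at least one of n independent samples is correct (perfect verifier)."""
    return 1 - (1 - p_single) ** n


def simulate(n: int, p_single: float = 0.3, trials: int = 10_000, seed: int = 0) -> float:
    """Empirical accuracy of best-of-n on a toy task whose correct answer is '4'."""
    rng = random.Random(seed)

    def toy_generate() -> str:
        # Hypothetical model: right with probability p_single, otherwise a wrong guess.
        return "4" if rng.random() < p_single else rng.choice(["3", "5", "22"])

    def toy_score(answer: str) -> float:
        # Hypothetical verifier that can check the answer exactly.
        return 1.0 if answer == "4" else 0.0

    hits = sum(best_of_n(toy_generate, toy_score, n) == "4" for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16):
        print(f"N={n:>2}: simulated={simulate(n):.3f}  predicted={success_probability(0.3, n):.3f}")
```

Real systems rarely have a perfect verifier, so gains typically flatten sooner than this formula suggests; the sketch only shows the mechanism by which more samples per query can raise answer quality.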

What is the Evidence?

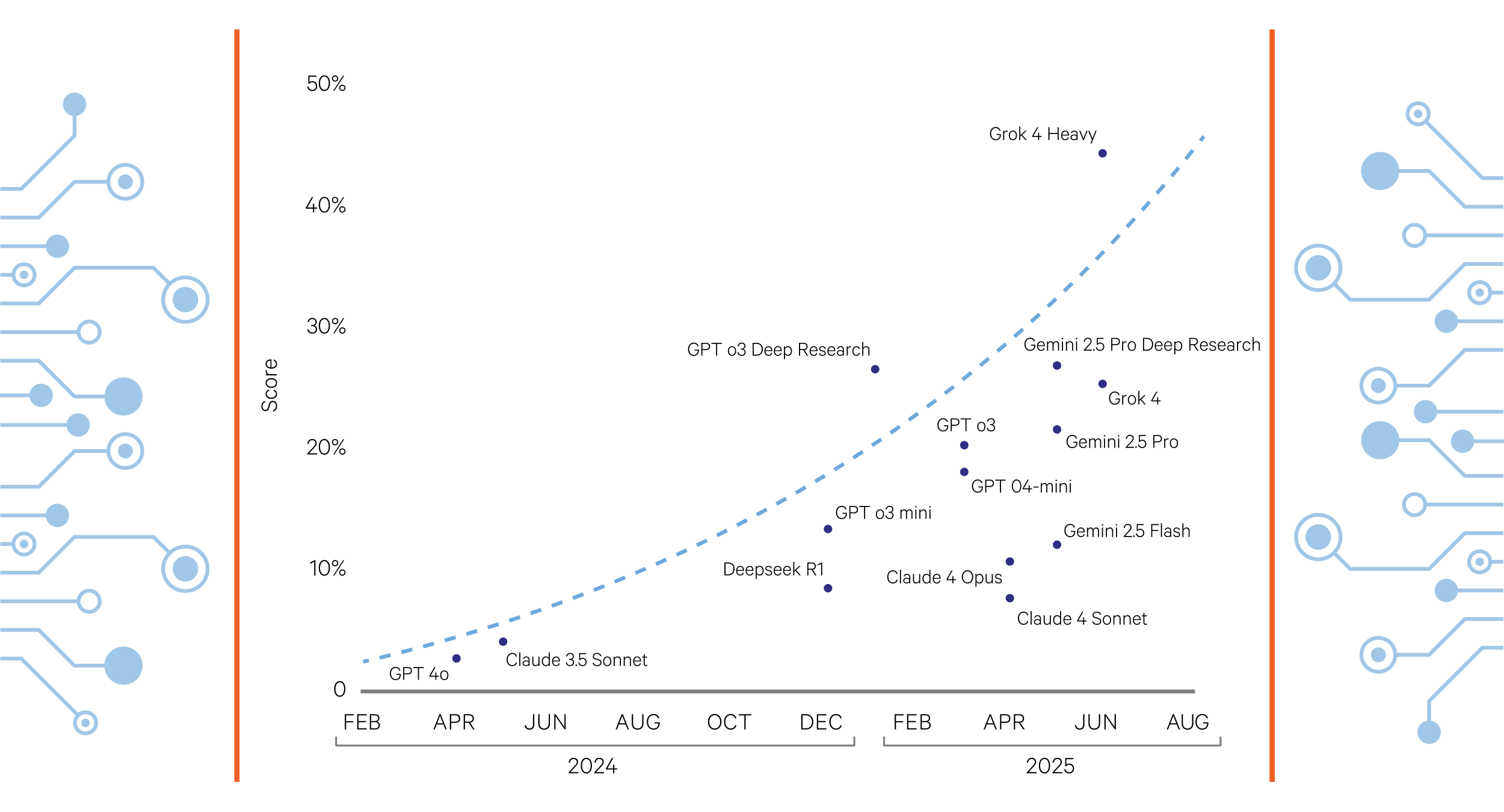

We believe empirical benchmarks leave little doubt that AI capabilities are improving at an extraordinary clip.

ARC-AGI leaderboard

The abstraction and reasoning corpus (ARC) challenges artificial intelligence models to solve visual puzzles that require abstract reasoning and generalization rather than pattern memorization. Since the June 2024 launch of the ARC Prize, top submissions have significantly improved performance on the benchmark. On the ARC-AGI-1 private evaluation set, the best-performing model achieved a task completion rate of 55.5 percent, up from a baseline of approximately 33 percent, representing an approximately 34 percent relative reduction in error rate.3
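The relative reduction in error rate quoted above follows directly from the two completion rates: the error rate falls from about 67 percent to 44.5 percent. A minimal worked check, using only the figures cited in the paragraph:

```python
def relative_error_reduction(baseline_accuracy: float, new_accuracy: float) -> float:
    """Share of the baseline error rate eliminated by the new result."""
    baseline_error = 1 - baseline_accuracy
    new_error = 1 - new_accuracy
    return (baseline_error - new_error) / baseline_error


if __name__ == "__main__":
    # Figures cited above: ~33% baseline and 55.5% best completion rate.
    reduction = relative_error_reduction(0.33, 0.555)
    print(f"error rate: {1 - 0.33:.1%} -> {1 - 0.555:.1%}, relative reduction: {reduction:.1%}")
```

The result, roughly 33.6 percent, rounds to the approximately 34 percent figure in the text; note that it is a relative measure of remaining errors removed, distinct from the 22.5-point absolute gain in completion rate.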

These gains were not primarily driven by larger training datasets or brute-force scaling, but by advances in program synthesis, architectural innovation, and more efficient test-time learning strategies, demonstrating how compute-efficient design can achieve superior results in domains that demand complex reasoning. This trajectory highlights the growing importance of scaling laws not only in raw model size, but also in computational efficiency and abstraction ability.

“Humanity’s Last Exam”

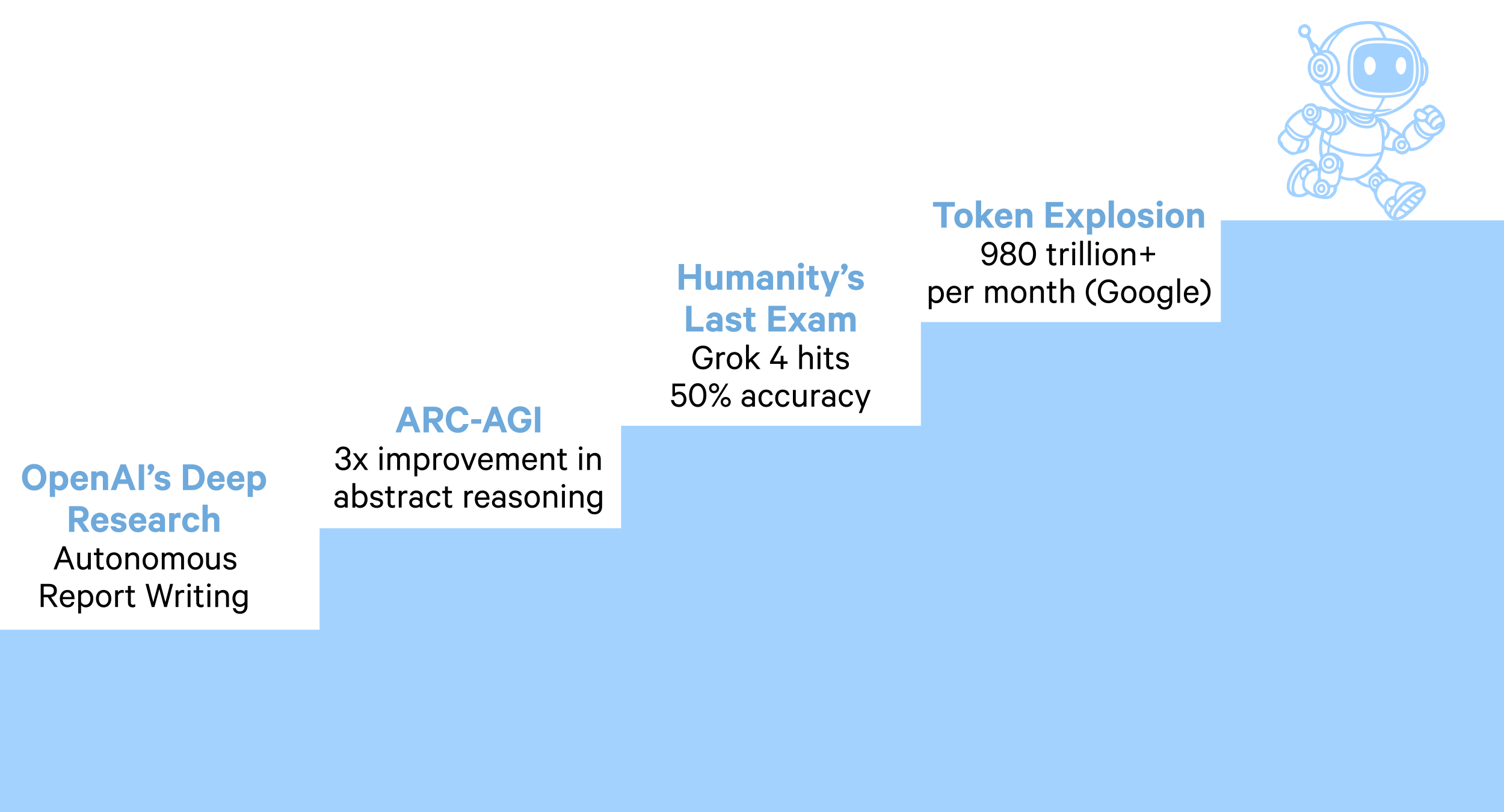

Developed by the Center for AI Safety and Scale AI, this benchmark comprises 2,700 expert-crafted questions spanning mathematics, science, and the humanities. In late 2023, top models barely cracked 10 percent accuracy; by mid-2025, xAI’s Grok had reached 45 percent, propelled by improvements in both pre-training quality and inference methodologies. This swift ascent, measured in months rather than years, demonstrates that AI systems may be rapidly approaching thresholds once thought to demarcate human-level understanding.

AI Systems Rapidly Approach Human-Level Understanding

These benchmarks validate the three scaling frontiers and provide a concrete basis for anticipating further breakthroughs. If recent trends persist, models trained with next-generation architectures and sprawling compute budgets will soon tackle tasks of even greater abstraction, from legal reasoning to novel scientific discovery.

What are the Ramifications?

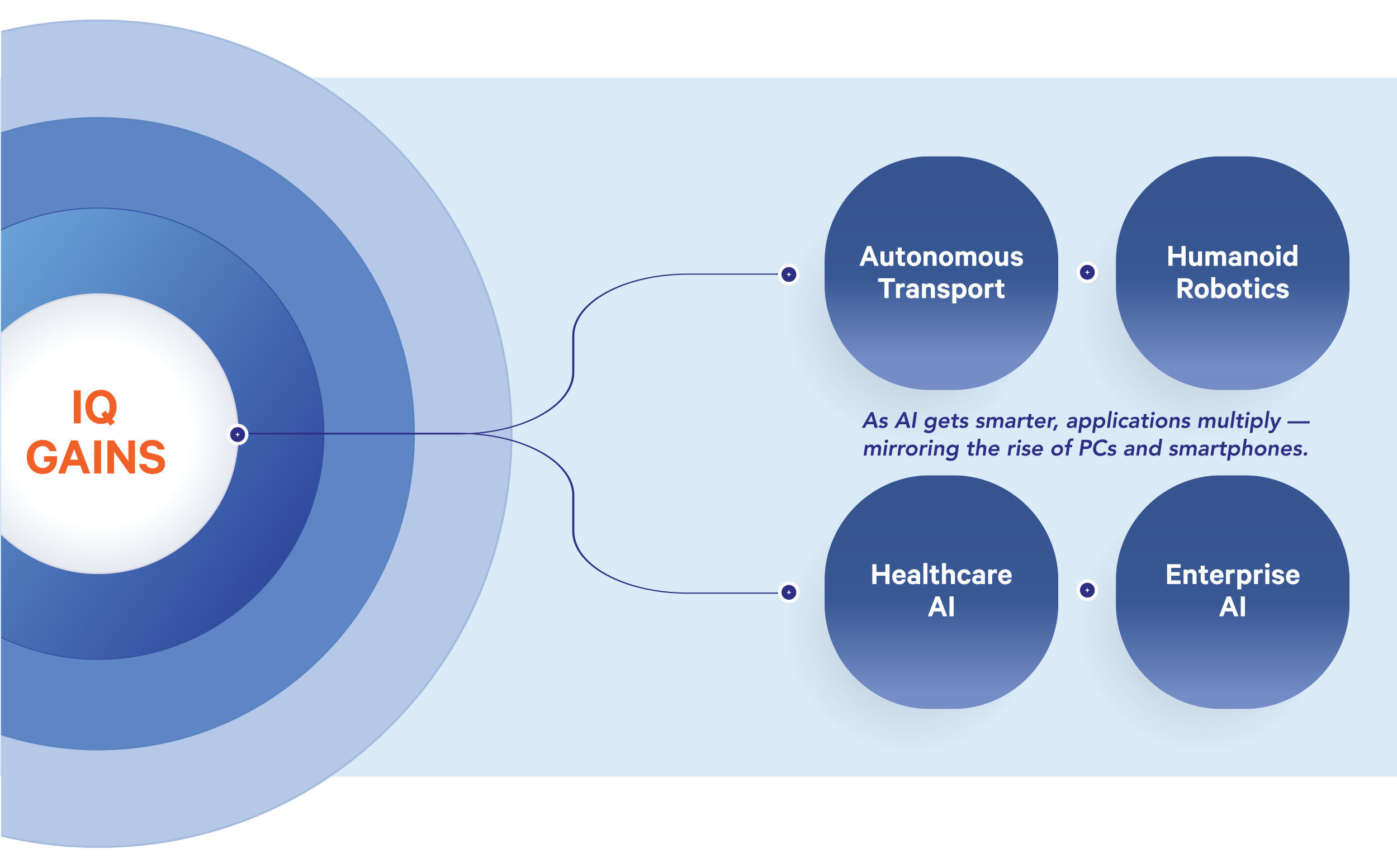

When foundational intelligence expands, applications multiply. We expect AI’s IQ explosion to unleash a corresponding use-case explosion, mirroring the trajectories of prior computing eras:

Historical Precedent

In the 1980s, PC software evolved from basic spreadsheets and word processors to thousands of specialized programs within a decade.4 Likewise, Apple’s App Store grew from 500 apps at launch in 20085 to over 2 million by 20166, despite regular purges of outdated or non-compliant listings.7 Each platform’s richer capabilities drew a vast ecosystem of developers eager to address niche and mainstream needs alike.

Use-Case Explosion After iPhone Release

Apple App Store Growth

Source: https://www.statista.com/statistics/268251/number-of-apps-in-the-itunes-app-store-since-2008, https://browser.geekbench.com.

Microservices and Flagships

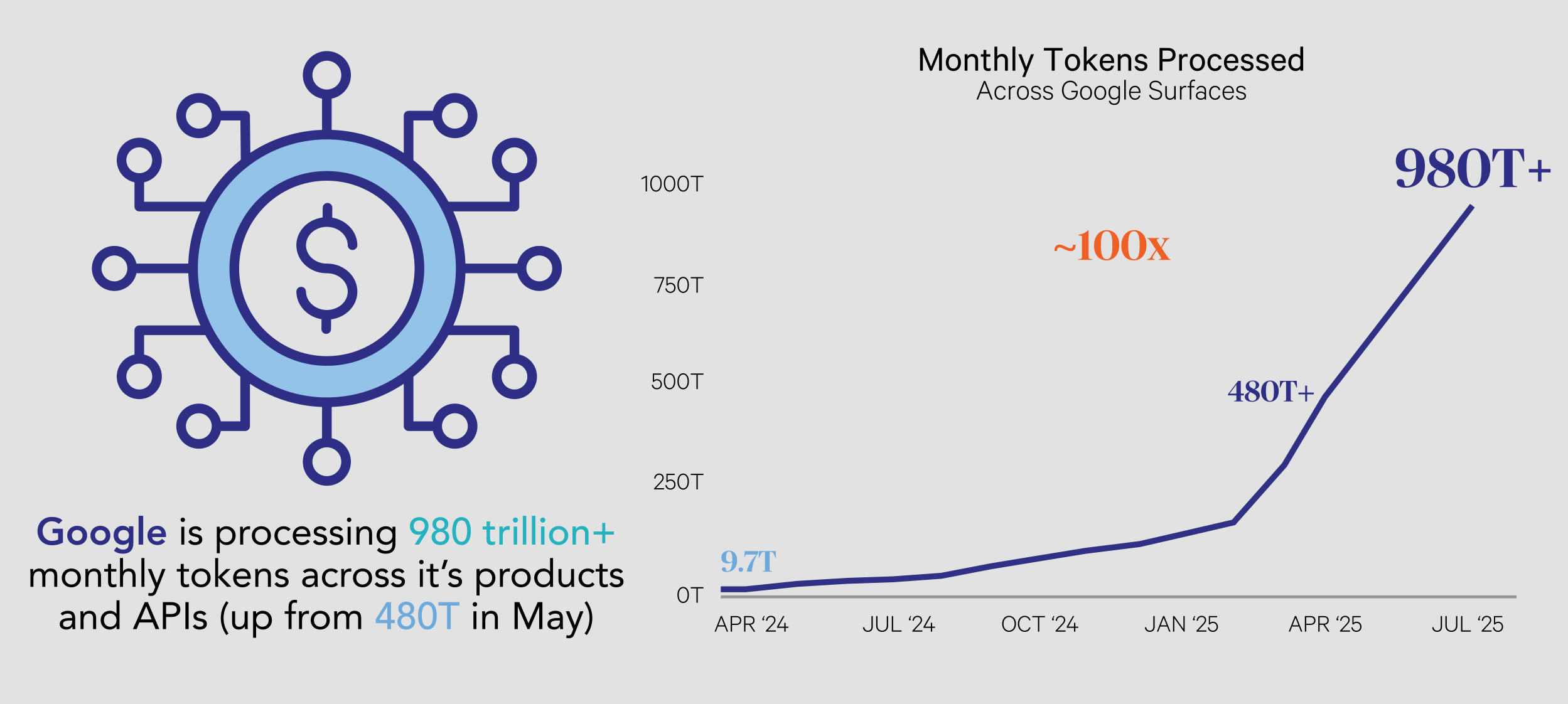

We foresee millions of discrete AI “microservices” solving narrowly defined tasks, from personalized tutoring bots to automated legal-document summarizers, alongside a handful of “anchor” platforms (e.g., foundational LLM APIs, enterprise-grade vision SDKs). We can already see this happening today. For example, Google has noted that it was processing more than 980 trillion LLM ‘tokens’ per month by mid-2025, up from 9.7 trillion per month in April 2024.

Google Increases AI Monetization

Sector-Specific Inflections

Across industries, we anticipate flagship applications that both demonstrate feasibility and catalyze broader adoption:

- Autonomous transportation: Waymo’s San Francisco robotaxi fleet now accounts for over one-quarter of all rideshare trips in its operational zones, reflecting rapid consumer acceptance and the commercial viability of autonomous services.

- Humanoid robotics: Tesla’s Optimus Gen 2 has halved its weight and doubled dexterity relative to its predecessor, moving closer to generalized physical labor applications from warehouse logistics to eldercare assistance.

- Enterprise AI: As an enterprise workflow platform, ServiceNow is in a unique position to directly harness the efficiencies enabled by AI. This is evidenced by the growth of its Pro Plus SKU, which, since its launch less than two years ago, has passed $250 million in annual contract value and is expected to grow 300 percent, to $1 billion, by the end of 2026.

- Healthcare AI: Diagnostics startups employing generative models for medical imaging interpretation now achieve accuracy on par with senior radiologists, while AI-driven drug-discovery platforms have reduced candidate screening times by up to 70 percent, accelerating pipelines and lowering development costs.

Use-Cases Explode Across Sectors

Infrastructure Investments: The Compute Super-Cycle

NVIDIA and GPU Accelerators

NVIDIA’s GPU architecture has become the de facto standard for AI workloads, from large-scale model training to real-time inference. Optimized tensor cores, high-speed NVLink interconnects, and a mature software ecosystem (CUDA, cuDNN) deliver step-function gains in performance-per-watt and scalability.

NVIDIA’s strategy hinges on what has come to be known as Huang’s Law, named after co-founder and CEO Jensen Huang, which holds that GPU performance on AI workloads more than doubles every two years, steadily driving down the cost of AI computation. This is achieved through aggressive, full-stack optimization across both hardware and software. Much as Moore’s Law once did for transistors, Huang’s Law has become a new benchmark for the AI era, lowering the cost per unit of compute and unlocking the ability to train increasingly sophisticated models.

This dynamic has a powerful flywheel effect. As compute costs fall, the scale and complexity of AI models rise, broadening real-world applicability across industries. This proliferation of use cases amplifies demand for NVIDIA’s chips. In essence, NVIDIA is generating its own future demand by systematically reducing the barrier to AI adoption.

Huang’s Law: GPU Performance More Than Doubles Every Two Years
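To see what this rule of thumb implies, the sketch below compounds a doubling of GPU performance every two years, taken here as a simplifying assumption (the rule says “more than” double, so these figures are a floor). It computes the implied annual improvement and, assuming for illustration that the price per GPU stays constant, the resulting cost per unit of performance.

```python
def performance_multiple(years: float, doubling_period_years: float = 2.0) -> float:
    """Performance gain after `years`, assuming one doubling per period."""
    return 2 ** (years / doubling_period_years)


def implied_annual_rate(doubling_period_years: float = 2.0) -> float:
    """Constant yearly improvement rate that produces one doubling per period."""
    return 2 ** (1 / doubling_period_years) - 1


def relative_cost_per_unit(years: float, doubling_period_years: float = 2.0) -> float:
    """Cost per unit of performance vs. today, assuming a constant price per GPU."""
    return 1 / performance_multiple(years, doubling_period_years)


if __name__ == "__main__":
    print(f"implied annual improvement: {implied_annual_rate():.1%}")
    for years in (2, 4, 6, 8, 10):
        print(f"after {years:>2} years: {performance_multiple(years):>4.0f}x performance, "
              f"{relative_cost_per_unit(years):.3f}x cost per unit")
```

Under these assumptions, performance improves about 41 percent a year and rises 32-fold over a decade, so the cost of a fixed amount of AI compute falls to roughly 3 percent of today’s level, which is the mechanism behind the flywheel described above.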

Taiwan Semiconductor and Advanced Foundry Capacity

Taiwan Semiconductor Manufacturing (TSMC) stands at the epicenter of the AI infrastructure boom. As the world’s leading pure-play foundry, TSMC is likely to hold an 87 percent share of 2025 smartphone SoC shipments built on 5nm-and-below nodes (including 3nm and 2nm), a share expected to grow to 89 percent by the end of 2028, according to Counterpoint Research.8 That dominance at the leading edge makes TSMC effectively the sole source for the most advanced logic processes required by today’s AI accelerators. This scale confers not just market leadership but durable pricing power: when demand for cutting-edge nodes outstrips capacity, we believe customers are likely to pay premium rates to secure wafer allocations.

Taiwan Semiconductor’s exposure to AI is both deep and accelerating. Under CEO C.C. Wei, the company expects its revenue from AI accelerators to double again in 2025 after tripling in 2024, and to grow at a mid-40 percent CAGR through 2029.9 We believe the company’s overall target of a 20 percent revenue CAGR over the same period is achievable, with AI-related compute chips serving as the primary growth engine for the business.
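Compounding the two growth targets shows why AI would become the primary engine. The sketch assumes that “mid-40 percent” means 45 percent and that the period runs five years from a 2024 base to 2029; both are interpretive assumptions rather than company figures.

```python
def compound_multiple(cagr: float, years: int) -> float:
    """Total growth multiple from compounding `cagr` for `years` years."""
    return (1 + cagr) ** years


YEARS = 5  # Assumed: 2024 base year through 2029.
AI_CAGR = 0.45  # Assumed reading of "mid-40 percent".
TOTAL_CAGR = 0.20

ai_multiple = compound_multiple(AI_CAGR, YEARS)
total_multiple = compound_multiple(TOTAL_CAGR, YEARS)
# When AI revenue compounds faster than total revenue, its share of revenue rises by this factor.
share_multiple = ai_multiple / total_multiple

if __name__ == "__main__":
    print(f"AI accelerator revenue: {ai_multiple:.2f}x")
    print(f"Total revenue:          {total_multiple:.2f}x")
    print(f"AI share of revenue:    {share_multiple:.2f}x")
```

Under these assumptions, AI accelerator revenue grows about 6.4x while total revenue grows about 2.5x, so the AI share of revenue rises roughly 2.6-fold over the period.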

To meet this surge, TSMC is investing $38 billion to $42 billion in capital expenditure for 2025, with a material portion earmarked for advanced packaging (CoWoS) and new 2nm and 3nm capacity, both crucial for high-performance compute and power efficiency in AI chips. Simultaneously, TSMC is deepening its U.S. footprint: its Arizona fabs, now completing their first test runs for customers such as NVIDIA, AMD, and Apple, will ramp to volume production in 2026 and eventually add tens of thousands of wafer starts per month. Backed by federal incentives and strategic partnerships, this U.S. expansion increasingly insulates TSMC, and by extension its AI-chip customers, from geopolitical uncertainty and export-control risks.

Today, TSMC’s AI growth is driven mainly by AI data-center chips from NVIDIA and Broadcom. Yet, as noted above, as model capabilities continue to increase, new markets should open, including self-driving vehicles, AI smartphones, enterprise AI, and humanoid robots. Each of these could become a sizable market for leading-edge chips and further accelerate TSMC’s revenue growth.

In sum, TSMC’s combination of technological leadership, scale economies, and strategic capex commitments makes it, in our view, the definitive beneficiary of the compute super-cycle—and a core building block for any portfolio seeking exposure to AI’s defining infrastructure transformation.

Broadcom: Networking and Custom Silicon

Broadcom has leveraged its core expertise in high-performance networking to become indispensable in AI data centers.

Its Jericho and Tomahawk switch families optimize GPU-to-GPU interconnects, reducing latency and improving throughput in disaggregated architectures. Coupled with custom application-specific integrated circuits, spanning storage controllers, security accelerators, and programmable packet gateways, Broadcom now captures over 10 percent of the global AI-semiconductor market.

Beyond hardware, Broadcom’s infrastructure-software franchise (mainframe, server virtualization, and Linux-kernel security products) adds high-margin, recurring revenue streams that amplify free-cash-flow conversion. We believe the company’s disciplined capital allocation, which prioritizes high-return buybacks and targeted M&A, should further enhance per-share economics as AI compute capex accelerates.

Supporting Ecosystems

Beyond silicon, ancillary infrastructure—liquid cooling systems, power-delivery modules, high-bandwidth optical interconnects, and data-center real estate—is expected to expand in tandem. We estimate global data-center power demand tied to AI workloads could triple by 2030, driving long-cycle investments in renewable generation, substation upgrades, and energy-efficient building design.10
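A tripling of demand by 2030 implies a steep annual growth rate. The sketch below assumes a 2025 base year, which the estimate above does not specify, so the five-year horizon is illustrative only.

```python
def implied_cagr(total_multiple: float, years: float) -> float:
    """Constant annual growth rate that compounds to `total_multiple` over `years`."""
    return total_multiple ** (1 / years) - 1


if __name__ == "__main__":
    # Assumed horizon: 2025 base year to 2030 (five years).
    rate = implied_cagr(3.0, 5)
    print(f"Tripling over 5 years implies ~{rate:.1%} growth per year")
```

On that assumed horizon, AI-related data-center power demand would need to grow roughly 25 percent a year; a longer horizon implies a lower rate.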

Collectively, these trends point to a multiyear capex super-cycle in compute infrastructure. Companies that combine technological leadership with scale and customer stickiness—whether in accelerator design, foundry services, or critical materials supply—are uniquely positioned to capture this wave, in our view.

Use Cases Surpassing Past Paradigm Shifts

Artificial intelligence is scaling across pre-training, reinforcement learning, and inference at rates unmatched by prior computing paradigms. We believe empirical benchmarks confirm that each doubling of compute yields outsized gains in both raw capability and cost efficiency. As “machine IQ” climbs, it unleashes a use-case explosion that mirrors or even surpasses the revolutions of PCs and smartphones, spawning millions of microservices alongside flagship platforms that redefine entire industries.

Underpinning this transformation is a voracious demand for compute infrastructure—from GPUs and custom AI accelerators to advanced nodes, packaging, and data-center support systems. We believe institutional investors who align portfolios with the structural leaders in this ecosystem—those with proprietary technologies, scale advantages, and entrenched customer relationships—stand to reap durable growth and compelling returns over the coming decade.

AI’s rapid advance has ignited a compute super-cycle, in which exponential growth in model scale and inference workloads meets scarce fabrication capacity and a limited supply of industry-leading chip-system design expertise. This dynamic grants a select group of semiconductor leaders durable pricing power, margin tailwinds, and entrenched moats. As enterprises and sovereigns ‘replatform’ critical systems around AI, we believe the combined strength of these pioneers in accelerators, foundry processes, interconnects, and chipmaking equipment will continue to drive value.

At Sands Capital, our disciplined, research-driven approach integrates bottom-up diligence with a top-down view of capital flow into AI compute. By focusing on companies that sit at the intersection of cutting-edge model innovation and the foundational hardware that powers it, we aim to capture the full arc of the AI opportunity, delivering long-term value for our clients in this defining technological era.

1 https://www.bbc.com/news/business-47802280

2 https://www.inc.com/business-insider/boss-doesnt-understand-technology-mocks-trend-wrong.html

3 A MindsAI submission achieved 55.5% on the private ARC-AGI-1 evaluation set, with the baseline estimated at ~33%, according to the ARC Prize 2024 Technical Report, January 8, 2025, https://arxiv.org/abs/2412.04604.

4 Paul Freiberger and Michael Swaine, Fire in the Valley: The Birth and Death of the Personal Computer, 3rd ed. (New York: Apress, 2014).

5 Brian X. Chen, “Five Years of the App Store,” Wired, July 8, 2013, https://www.wired.com/2013/07/five-years-of-the-app-store/.

6 Apple Inc., “Apple Previews iOS 10,” Apple Newsroom, June 13, 2016, https://www.apple.com/newsroom/2016/06/13-apple-previews-ios-10-biggest-release-ever/.

7 Sarah Perez, “Apple to Begin Purging Outdated Apps from the App Store Next Month,” TechCrunch, September 1, 2016, https://techcrunch.com/2016/09/01/apple-to-begin-purging-outdated-apps-from-the-app-store-next-month/.

10 https://www.mckinsey.com/featured-insights/sustainable-inclusive-growth/charts/data-center-demands

Disclosures:

The views expressed are the opinion of Sands Capital and are not intended as a forecast, a guarantee of future results, investment recommendations or an offer to buy or sell any securities. The views expressed were current as of the date indicated and are subject to change. This material may contain forward-looking statements, which are subject to uncertainty and contingencies outside of Sands Capital’s control. Readers should not place undue reliance upon these forward-looking statements. All investments are subject to market risk, including the possible loss of principal. Recent tariff announcements may add to this risk, creating additional economic uncertainty and potentially affecting the value of certain investments. Tariffs can impact various sectors differently, leading to changes in market dynamics and investment performance. There is no guarantee that Sands Capital will meet its stated goals. Past performance is not indicative of future results. A company’s fundamentals or earnings growth is no guarantee that its share price will increase. Forward earnings projections are not predictors of stock price or investment performance, and do not represent past performance. There is no guarantee that the forward earnings projections will accurately predict the actual earnings experience of any of the companies involved, and no guarantee that owning securities of companies with relatively high price to earnings ratios will cause a portfolio to outperform its benchmark or index. GIPS Reports found here.

Unless otherwise noted, the companies identified represent a subset of current holdings in Sands Capital portfolios and were selected on an objective basis to illustrate examples of the range of companies involved in AI’s expected next wave of infrastructure growth. They were selected to reflect holdings that continue to enable or potentially benefit from the adoption of generative artificial intelligence across multiple geographies.

Company logos and website images are used for illustrative purposes only and were obtained directly from the company websites. Company logos and website images are trademarks or registered trademarks of their respective owners and use of a logo does not imply any connection between Sands Capital and the company.

As of July 31, 2025, Alphabet, Amazon, Apple, Broadcom, Microsoft, NVIDIA, OpenAI, and Taiwan Semiconductor Manufacturing were holdings in Sands Capital strategies.

Any holdings outside of the portfolio that were mentioned are for illustrative purposes only.

The specific securities identified and described do not represent all the securities purchased, sold, or recommended for advisory clients. There is no assurance that any securities discussed will remain in the portfolio or that securities sold have not been repurchased. You should not assume that any investment is or will be profitable. A full list of public portfolio holdings, including their purchase dates, is available here.

Notice for non-US Investors.

References to “we,” “us,” “our,” and “Sands Capital” refer collectively to Sands Capital Management, LLC, which provides investment advisory services with respect to Sands Capital’s public market investment strategies, and Sands Capital Alternatives, LLC, which provides investment advisory services with respect to Sands Capital’s private market investment strategies, which are available only to qualified investors. As the context requires, the term “Sands Capital” may refer to such entities individually or collectively. As of October 1, 2021, the firm was redefined to be the combination of Sands Capital Management and Sands Capital Alternatives. The two investment advisers are combined to be one firm and are doing business as Sands Capital. Sands Capital operates as a distinct business organization, retains discretion over the assets between the two registered investment advisers, and has autonomy over the total investment decision-making process.

Information contained herein may be based on, or derived from, information provided by third parties. The accuracy of such information has not been independently verified and cannot be guaranteed. The information in this document speaks as of the date of this document or such earlier date as set out herein or as the context may require and may be subject to updating, completion, revision, and amendment. There will be no obligation to update any of the information or correct any inaccuracies contained herein.